Midgard, a novel re-envisioning of the virtual memory abstraction that is ubiquitous in computer systems, has reached an exciting new milestone: a technology leader is funding research that will bring together experts from Yale, the University of Edinburgh and EcoCloud at EPFL.

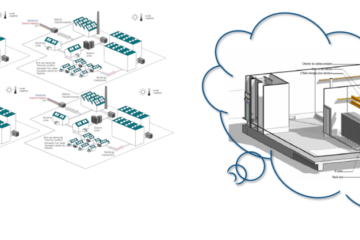

Global semiconductor manufacturer Intel is sponsoring an EcoCloud-led project entitled “Virtual Memory for Post-Moore Servers”. The project is part of Intel's wider research into improving the power, performance and total cost of ownership of servers in large-scale datacenters.

What is the Post-Moore era?

Moore’s law is named after Gordon Moore, who first formulated it in 1965 and would later become CEO of Intel. In its best-known form, as revised by Moore in 1975, it predicts that the number of transistors in a dense integrated circuit doubles roughly every two years. The prediction has held remarkably well for decades, but physical limitations are expected to curtail this trend within the next couple of years: we are approaching the “Post-Moore era”. Many observers remain optimistic that technological progress will continue through other avenues, including new chip architectures, quantum computing and artificial intelligence.

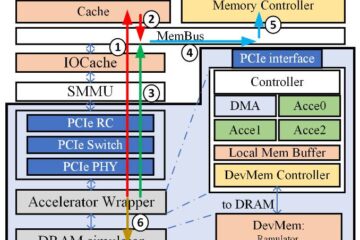

Midgard is a radical new approach to virtual memory that optimizes memory use in datacenters by introducing an innovative, highly efficient namespace for access control and memory protection. Efficient memory protection is a foundation for virtualization, confidential computing, the use of accelerators, and emerging computing paradigms such as serverless computing.

Midgard adds an intermediate layer between virtual and physical memory. This layer makes substantial performance gains possible for datacenter servers as memory capacity grows, while remaining compatible with modern operating systems such as Linux, Android, macOS and Windows.

Building on Midgard’s radical approach to virtual memory, the “Virtual Memory for Post-Moore Servers” project aims to disrupt traditional server technology through full-stack evaluation and hardware/software co-design.